There’s a quiet but growing unease among developers who use AI tools to help them write code. It’s not about whether AI is useful; it absolutely is. It’s about something deeper, something that most discussions skip over: consistency.

In software development, consistency is sacred. It’s what separates a reliable system from a chaotic one. It’s the reason why design patterns exist, why teams follow style guides, and why entire frameworks evolve around conventions. Predictability is the lifeblood of maintainability. When you look at a codebase six months after writing it, you expect to see logic that flows in a familiar, uniform rhythm. Every developer on the team should be able to anticipate how a module behaves before even opening it. That’s how scaling works, not just in infrastructure, but in human understanding.

AI, however, doesn’t work that way.

When you ask an AI model to write a function, it doesn’t have a single mental model of your software’s architecture. It generates what looks right at the moment, based on probabilities learned from millions of code samples. Ask the same model to solve the same problem again, and it might return a completely different approach, equally valid, equally functional, but structurally unrelated. One moment you get a recursive solution, another moment an iterative one. Sometimes it favors hooks, sometimes classes. To the human eye, both might look fine. To a growing codebase, this is the beginning of entropy.
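
To make the recursive-versus-iterative point concrete, here is a minimal sketch of two answers an assistant might plausibly give to the same prompt ("flatten a nested list"). The function names and the task itself are hypothetical, chosen only for illustration. Both versions return the same result, yet they share almost no structure:

```python
from typing import Any, List


def flatten_recursive(items: List[Any]) -> List[Any]:
    """Flatten arbitrarily nested lists by recursing into each sublist."""
    flat: List[Any] = []
    for item in items:
        if isinstance(item, list):
            flat.extend(flatten_recursive(item))
        else:
            flat.append(item)
    return flat


def flatten_iterative(items: List[Any]) -> List[Any]:
    """Flatten the same input with an explicit stack of iterators."""
    flat: List[Any] = []
    stack = [iter(items)]
    while stack:
        try:
            item = next(stack[-1])
        except StopIteration:
            # The innermost list is exhausted; resume its parent.
            stack.pop()
            continue
        if isinstance(item, list):
            stack.append(iter(item))
        else:
            flat.append(item)
    return flat


nested = [1, [2, [3, 4]], 5]
# Same behavior, structurally unrelated implementations.
assert flatten_recursive(nested) == flatten_iterative(nested) == [1, 2, 3, 4, 5]
```

Neither version is wrong. The trouble starts when both styles end up in the same codebase for no reason anyone can name.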

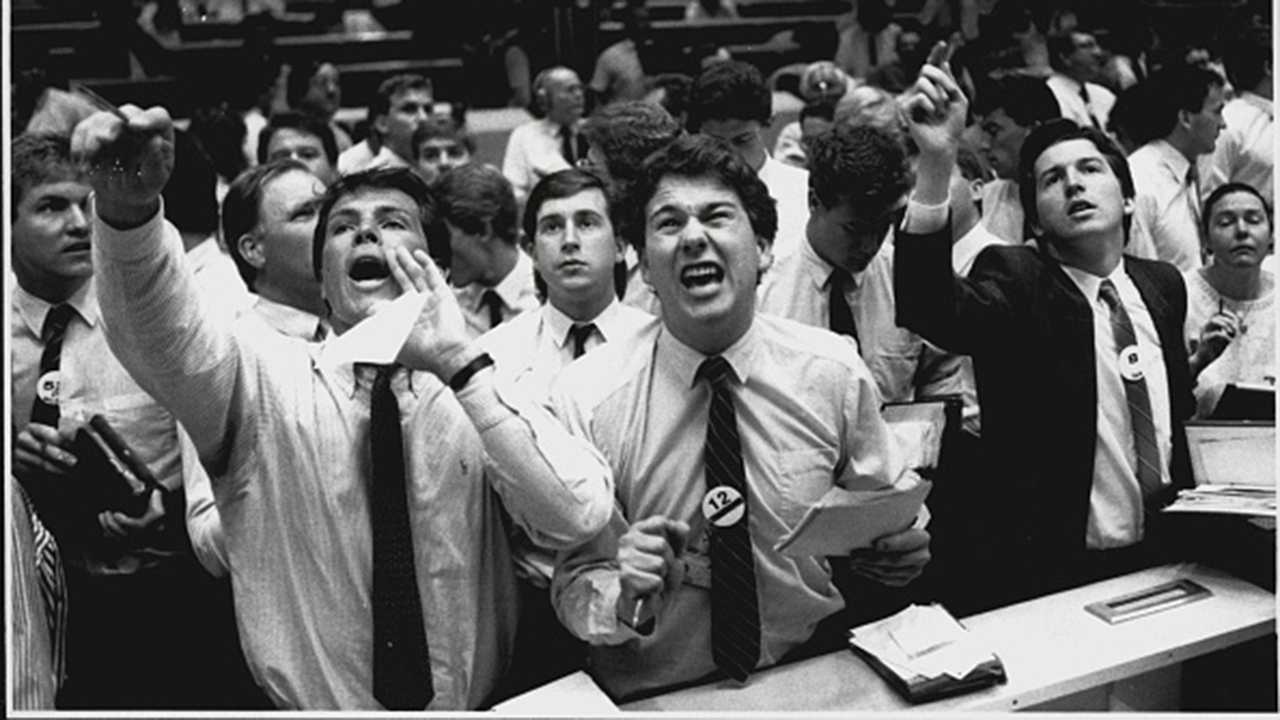

The Cost of Variability

In the world of AI-assisted coding, variability is often celebrated as creativity. We marvel at how AI can “think differently” or come up with a “fresh” way to do something. But in production software, creativity is a double-edged sword. You don’t want every developer, human or machine, reinventing how to handle a simple data transformation or error message. You want one solid, agreed-upon way that works every time. The moment you allow multiple equally correct approaches to coexist, you introduce fragmentation.

This fragmentation doesn’t announce itself immediately. It creeps in quietly. The next developer reading the code has to stop and think, “Wait, why was it done this way here, but differently over there?” Soon you have multiple paradigms living inside the same codebase, and debugging becomes a game of archaeology.

Predictability is the True Measure of Quality

There’s an old saying among developers: “Code should be boring.” It’s a compliment, not an insult. It means your code does exactly what others expect it to do. It behaves predictably. You can refactor it without fear because it follows the same internal logic throughout. In that sense, predictability is the foundation of quality.

But AI-generated code tends to be exciting: it surprises you. And that’s precisely the problem. In a creative domain, surprise is innovation. In software, surprise is a bug waiting to happen.

When AI tools generate different versions of similar logic, developers lose the single mental model that makes a system coherent. Predictability breaks down. Tests might still pass, but the underlying architecture begins to drift apart. It’s like having multiple dialects evolve inside the same language: communication remains possible, but understanding takes more effort every time.

The Path Forward

AI can suggest, but humans must standardize. We need to teach our tools what our version of consistency looks like, perhaps by fine-tuning models on internal codebases or constraining AI to work within established frameworks. Until then, the idea that AI can autonomously write entire systems should be approached with caution. Because no matter how advanced it becomes, AI can’t predictably reproduce intent, only syntax.
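
One lightweight way to hold generated code to a house style is an automated convention check that runs before a suggestion is accepted. The sketch below uses Python's standard `ast` module to flag functions that call themselves directly. The "no direct recursion" rule and the function name `find_recursive_functions` are assumptions made up for this example, not a recommendation or an existing tool:

```python
import ast
from typing import List


def find_recursive_functions(source: str) -> List[str]:
    """Return names of functions in `source` that call themselves directly.

    Only plain calls like `name(...)` are detected; method calls such as
    `self.name(...)` and indirect recursion are out of scope for this sketch.
    """
    tree = ast.parse(source)
    offenders: List[str] = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for inner in ast.walk(node):
                if (
                    isinstance(inner, ast.Call)
                    and isinstance(inner.func, ast.Name)
                    and inner.func.id == node.name
                ):
                    offenders.append(node.name)
                    break
    return offenders


# Hypothetical AI-generated snippet to check against the team convention.
sample = '''
def total_recursive(values):
    if not values:
        return 0
    return values[0] + total_recursive(values[1:])

def total_iterative(values):
    result = 0
    for value in values:
        result += value
    return result
'''

assert find_recursive_functions(sample) == ["total_recursive"]
```

A check like this doesn’t make the model consistent. It only makes the inconsistency visible, so that a human can decide which approach is the standard.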

Software is not just about making things work. It’s about making them work the same way, every time. And until AI learns to care about that, true reliability will remain a human craft.

Opinionated Code
