When traversing the world of modern technology, one cannot ignore the elephant in the chat room: ChatGPT. This artificial intelligence marvel has cemented its place as the go-to oracle for our digital inquiries. But to grasp its significance fully, we must also consider the interplay with other hot-button topics, notably the quaintly named “Net Neutrality.” This principle, where it is actually enforced, keeps the Internet a level playing field for all hazardous cats and mustachioed mice, at least in theory. The dream is an unfettered internet, free from the sinister clutch of favoritism by internet service providers, delivering every soul the same slice of the information pie, without sprinkles of bias muddled in marketing agendas.

Fast forward to today, and ChatGPT has seamlessly slipped into our lives as an algorithmic oracle, equipped with the noble pursuit of neutrality in its results, relying on sophisticated learning methods. Yet don't let its stoic demeanor fool you. Beneath the surface lies a myriad of intricate algorithms, each tuned by parameters far more numerous than a cat's nine lives. Now, you might assume that such a complex system produces outputs untouched by human prejudice. A credible black box, they say; yet the claim is as misleading as your uncle's fishing stories. Let's put on our thinking caps for a moment and contemplate: What truly constitutes a "right" result, and who gets to judge? That’s one moral maze that even Sherlock would struggle to navigate.

Language models, particularly those like ChatGPT, are not mere vending machines spitting out facts with the click of a button. They are finely tuned musical instruments, susceptible to the slightest alteration of parameters: like temperature, not that of your morning coffee but rather a sampling setting that controls how random, and therefore how unpredictable, a response can get. Increase the “temperature” dial, and our friendly chatbot starts reaching for less likely words, occasionally wandering into erratic or even offensive territory. Dial it down, and the vibe shifts to a bland monotone, providing uninspired responses akin to your high school geography teacher. Thus, amidst the myriad configurations lies a critical balancing act between engagement and inoffensiveness, which would make any tightrope walker proud.
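
For the curious, here is a minimal sketch of what that temperature dial does to a model's word choices. It is an illustration only, not ChatGPT's actual code: the function name `softmax_with_temperature` and the three scores in `logits` are made up for the example. The idea is that each candidate word's raw score is divided by the temperature before being turned into a probability.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words (not real model output).
logits = [2.0, 1.0, 0.1]

cold = softmax_with_temperature(logits, 0.2)     # low temperature
neutral = softmax_with_temperature(logits, 1.0)  # scores used as they are
hot = softmax_with_temperature(logits, 5.0)      # high temperature

for name, probs in (("cold", cold), ("neutral", neutral), ("hot", hot)):
    print(name, [round(p, 3) for p in probs])
```

At a low temperature nearly all of the probability lands on the top-scoring word, which is where the geography-teacher monotone comes from. At a high temperature the three options drift toward even odds, so the long shots get picked far more often.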

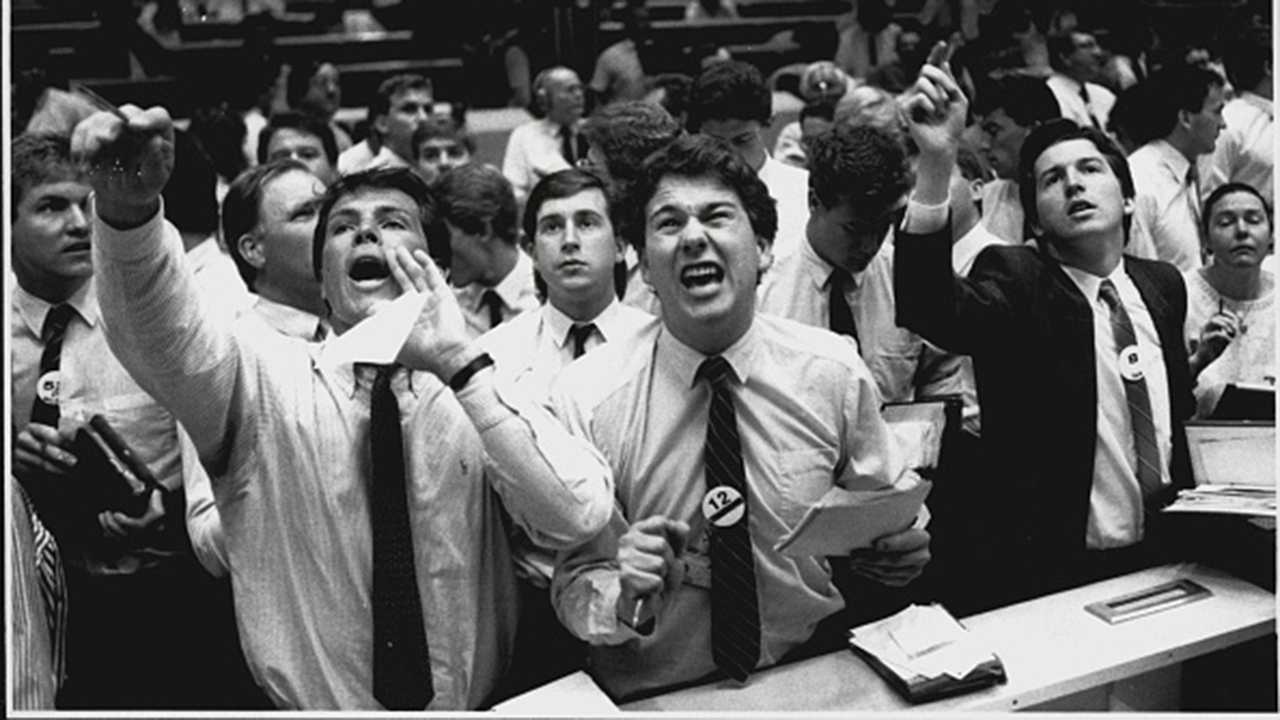

We’ve recently witnessed the evolution of these tools pushing themselves not just as information aggregators but as essential facilitators in various industries, effectively charging a toll to wagon-pullers like researchers who approach them for reliable data. Search engines, once synonymous with an avalanche of sponsored links, now find themselves integrating chat functions as standard. It’s a techno-marketing sleight of hand, sly enough to make magicians green with envy, streaming information from the cloud and serving it up as gourmet content. But here’s the kicker: this seems just as designed to maintain control as it is to serve quality information.

Now, hold your horses—let's dive into a pressing myth: the notion that GPTs are harbingers of job loss. This narrative often occupies the summit of technophobe discussions, exuding a whiff of old-world fear. Sure, companies aggressively lap up automated processes like a thirsty dog at a watering hole, especially after realizing that robots don’t require paid sick leave or vacation days. However, this perception overlooks an essential nuance: generative AI isn’t ready to go full Terminator on human workers just yet. Instead, it must operate within a kaleidoscope of political correctness, meticulously avoiding divisive topics like a cat dodging a bath. Just imagine the chaos if ChatGPT were posed a question regarding the complexities of the Israeli-Palestinian conflict; the resultant response could be so bland it might as well be served on a white plate.

Some skeptics suggest breathing easy; maybe we’re witnessing the rise of yet another techno bubble, destined to burst spectacularly like that infamous crypto bubble or the Metaverse hype train. Others view ChatGPT as a slick tool, emblematic more of excessive marketing drive than of technological breakthrough. Yet there's an undercurrent of concern: what happens when this dazzling power falls into the wrong hands? Could we one day find ourselves placing our trust in a tool that wields immeasurable influence as it shapes perceptions, behaviors, and ideologies on topics whose surface we have only skimmed?

In a world progressively leaning on technology to fill in the blanks of our deeper historical narratives, we may be paving the way for a reality where a computational deity holds our attention. This omniscient entity could serve as a crutch for discerning credible sources among the vast sea of misinformation. If we’re not careful, we could unknowingly cultivate an environment where radiantly packaged myths and sensationalized truths become our new gospel.

Thus, navigating the wonders of generative AI prompts a delicate dance. It beckons us to ask not just what is being said, but who is deciding what's worthy of being said, and what it means to accept such guidance so readily. So, sharpen your wits; after all, in this epoch of technical marvels, having all the right questions may just be more crucial than having the right answers.

Opinionated Code
